This tutorial on troubleshooting poller issues is the first installment of a series devoted to the resolution of common issues reported by clients and managed by our teams.

Poller issues generally take two forms:

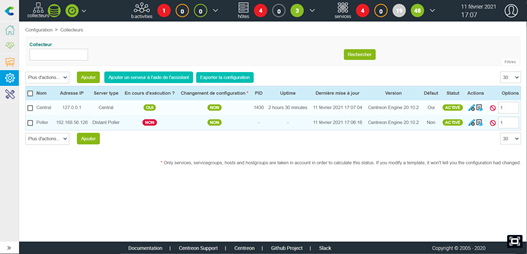

#1: The poller is reported as “not running” on the poller configuration page:

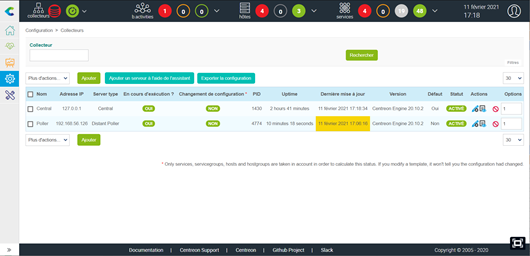

#2: The last update time is older than 15 minutes and highlighted in yellow:

Solve this issue in Centreon 21.04

For the purpose of this tutorial, we will use a simple distributed Centreon platform with the following assets:

- a central server with an embedded DB instance (IP 192.168.56.125)

- a poller (IP 192.168.56.126)

Note that in the following examples, commands are shown as executed by the root user. Never forget that with great power comes great responsibility!

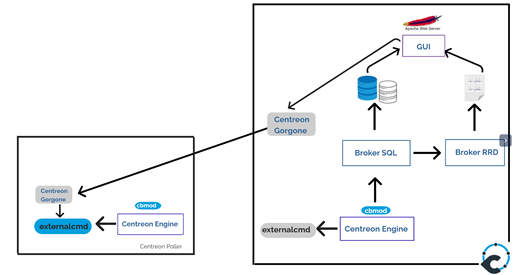

Quick tour: Overview of a typical Centreon architecture

For efficient and targeted troubleshooting, you need to know what the different components of a Centreon platform are, and how they interact with each other. As a quick reminder, here’s a platform example comprising a central server and a poller.

- The poller’s monitoring engine (centreon-engine) executes a set of monitoring scripts (probes) to query the monitored devices.

- Results are returned by the centreon-engine’s cbmod module to the centreon-broker instance on the central (BBDO protocol, TCP 5669).

- The central server’s centreon-broker module handles and processes the results, populating DB tables or generating graphical RRD files.

- The web interface (Apache server and PHP backend) displays the monitoring information to the user.

- The centreon-gorgone module exports the configurations made on the interface to the pollers and launches external commands; centreon-gorgone is a client/server module, active both on the central server and on the pollers (ZMQ protocol, TCP 5556).

Now that we know who does what in a Centreon architecture, let’s get to the troubleshooting.

Check 1: Network connections

The poller must be connected to TCP port 5669 on the central server (BBDO flows). Conversely, the central server must be connected to TCP port 5556 on the poller (ZMQ centreon-gorgone flows).

The netstat command is helpful to check this, but ss can also be used.

When executing this command on the poller, we should get the following results:

    [root@centreon-poller ~]# netstat -plant | egrep '5556|5669'
    tcp 0 0 0.0.0.0:5556 0.0.0.0:* LISTEN 3761/perl
    tcp 0 0 192.168.56.126:5556 192.168.56.125:57466 ESTABLISHED 3761/perl
    tcp 0 0 192.168.56.126:33598 192.168.56.125:5669 ESTABLISHED 3554/centengine

From the Central, the results will be the same, but in the opposite direction:

    [root@centreon-central ~]# netstat -plant | egrep '5669|5556'
    tcp 0 0 0.0.0.0:5669 0.0.0.0:* LISTEN 1436/cbd
    tcp 0 0 192.168.56.125:57466 192.168.56.126:5556 ESTABLISHED 1781/gorgone-proxy
    tcp 0 0 192.168.56.125:5669 192.168.56.126:33574 ESTABLISHED 1436/cbd
    tcp 0 0 127.0.0.1:5669 127.0.0.1:58200 ESTABLISHED 1436/cbd
    tcp 0 0 127.0.0.1:58200 127.0.0.1:5669 ESTABLISHED 1430/centengine

Should the status be different from “ESTABLISHED”, checking data flows and firewall logs will usually help locate the problem.
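The same check can be scripted. Here is a minimal sketch, built around a hypothetical helper that we call has_established (it is not part of Centreon). It reads netstat -tan or ss -tan output on standard input and succeeds when at least one established connection involves the given port. It matches on the ESTAB prefix because ss abbreviates the state name.

```shell
# has_established PORT
# Hypothetical helper: reads `netstat -tan` or `ss -tan` output on stdin and
# returns 0 if at least one established connection uses PORT on either end.
has_established() {
    awk -v port="$1" '
        /ESTAB/ {                      # matches ESTABLISHED (netstat) and ESTAB (ss)
            for (i = 1; i <= NF; i++)
                if ($i ~ (":" port "$")) found = 1
        }
        END { exit (found ? 0 : 1) }
    '
}

# Example, run on the poller:
#   ss -tan | has_established 5669 && echo "BBDO flow to the central is up"
# Example, run on the central:
#   ss -tan | has_established 5556 && echo "gorgone flow to the poller is up"
```

A listening socket alone does not satisfy the helper, which is intentional: a LISTEN line only shows that the daemon is up, not that the other side managed to connect.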

Another common cause of network connection failures is that the Linux firewall is running on either the central or the poller.

This can be easily checked by launching the following command:

    [root@centreon-central ~]# systemctl status firewalld
    firewalld.service - firewalld - dynamic firewall daemon
       Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)
       Active: inactive (dead)
         Docs: man:firewalld(1)

If the firewalld service is still active, stop and disable it:

    [root@centreon-central ~]# systemctl stop firewalld
    [root@centreon-central ~]# systemctl disable firewalld
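Once the firewall is out of the way, it can be handy to re-test reachability from the other host. The sketch below uses a hypothetical helper named port_open. It assumes that bash (for its /dev/tcp pseudo-device) and the coreutils timeout command are available, which is an assumption about your system rather than something Centreon provides.

```shell
# port_open HOST PORT
# Hypothetical helper: returns 0 if a TCP connection to HOST:PORT succeeds
# within 3 seconds. Relies on bash's /dev/tcp pseudo-device and on the
# coreutils `timeout` command, so bash must be installed.
port_open() {
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$1" "$2" 2>/dev/null
}

# Examples, with the addresses used in this tutorial:
#   on the poller:  port_open 192.168.56.125 5669 || echo "central BBDO port unreachable"
#   on the central: port_open 192.168.56.126 5556 || echo "poller gorgone port unreachable"
```

A failure here only says that the TCP handshake did not complete; it does not tell a firewall drop apart from a daemon that is not listening, so the netstat output above remains the reference.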

If a configuration error is identified at this stage (for example, an erroneous IP address entered when creating a poller), the entry must be checked and corrected at several levels of the Centreon configuration. We will cover that in a future article. 😉

Check 2: The centreon-engine process

This step checks that the monitoring engine is up and running on the poller. First, let’s see if the centengine process (the daemon of the centreon-engine module) is behaving as it should:

    [root@centreon-poller ~]# systemctl status centengine
    centengine.service - Centreon Engine
       Loaded: loaded (/usr/lib/systemd/system/centengine.service; enabled; vendor preset: disabled)
       Active: active (running) since Wed 2021-02-10 09:56:35 CET; 1h 6min ago
     Main PID: 4463 (centengine)
       CGroup: /system.slice/centengine.service
               └─4463 /usr/sbin/centengine /etc/centreon-engine/centengine.cfg

The current status should be “active (running)”. It is also important that the service is “enabled”, as this lets the daemon start automatically with the system after a power outage or an unexpected reboot (did someone just pull the plug?).

If status is “disabled”, activate the service at startup with the following command:

    [root@centreon-poller ~]# systemctl enable centengine
    Created symlink from /etc/systemd/system/centreon.service.wants/centengine.service to /usr/lib/systemd/system/centengine.service.
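Both conditions (running now, and enabled at boot) can be read in one go with systemctl is-active and systemctl is-enabled, which each print a single word. The helper below, service_verdict, is a hypothetical name of ours; it is a small sketch that turns those two words into a diagnosis.

```shell
# service_verdict ACTIVE_STATE ENABLED_STATE
# Hypothetical helper: turns the one-word answers of `systemctl is-active`
# and `systemctl is-enabled` into a short diagnosis.
service_verdict() {
    if [ "$1" != "active" ]; then
        echo "not running"
    elif [ "$2" != "enabled" ]; then
        echo "running, but will not start at boot"
    else
        echo "ok"
    fi
}

# Example, run on the poller:
#   service_verdict "$(systemctl is-active centengine)" "$(systemctl is-enabled centengine)"
```

The same call works for the other daemons covered in this tutorial by swapping the service name.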

Sometimes the centengine daemon is reported as dead, as in the following example:

    [root@poller ~]# systemctl status centengine
    centengine.service - Centreon Engine
       Loaded: loaded (/usr/lib/systemd/system/centengine.service; enabled; vendor preset: disabled)
       Active: inactive (dead) since Wed 2021-02-10 09:56:35 CET; 22s ago
      Process: 22846 ExecReload=/bin/kill -HUP $MAINPID (code=exited, status=0/SUCCESS)
      Process: 4547 ExecStart=/usr/sbin/centengine /etc/centreon-engine/centengine.cfg (code=exited, status=0/SUCCESS)
     Main PID: 4547 (code=exited, status=0/SUCCESS)

Try forcing a restart with systemctl restart centengine, then check the log in /var/log/centreon-engine/centengine.log for clues about how the daemon ended up in this state. The most common causes are:

- SELinux configuration

- Rights on folders and files

- Missing libraries or dependencies…
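Each of these three causes has a quick first-pass check. The sketch below groups them in a hypothetical diagnose_centengine function, using the standard getenforce, ls and ldd commands and only the paths that appear in this tutorial. The missing_libs helper is also an illustrative name of ours.

```shell
# missing_libs
# Hypothetical helper: reads `ldd` output on stdin and prints the name of
# every shared library that could not be resolved.
missing_libs() {
    awk '/not found/ { print $1 }'
}

# diagnose_centengine
# Runs one quick check per cause listed above. The paths are the ones used
# in this tutorial; adjust them if your installation differs.
diagnose_centengine() {
    # SELinux mode: prints Enforcing, Permissive or Disabled
    getenforce
    # Owners and rights on the engine's configuration, log and command directories
    ls -ld /etc/centreon-engine /var/log/centreon-engine /var/lib/centreon-engine/rw
    # Unresolved shared libraries, if any (no output means none are missing)
    ldd /usr/sbin/centengine | missing_libs
}
```

Run diagnose_centengine on the poller as root. None of these commands changes anything, so they are safe to run before the restart.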

Check 3: The centreon-gorgone process

As mentioned above, the centreon-gorgone module (run by the gorgoned process) was introduced in Centreon 20.04, replacing the former centcore module.

The centreon-gorgone module provides a client/server connection between central and pollers whereas centcore only knew how to copy files using SSH (pretty much).

A failing poller can sometimes be traced to a fault in the centreon-gorgone module, as it is responsible for collecting the “Is running or not” status of pollers. It may happen, for example, that monitoring is running normally and returning current, real-time data, yet the poller is displayed as “not running.” Here’s how to run the check in that case.

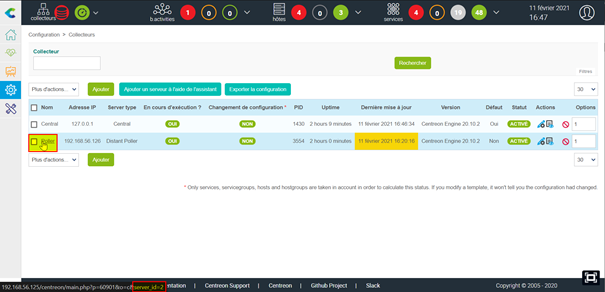

- First, identify and note the ID of the problematic poller. Go to Configuration > Pollers and hover the mouse over the poller name:

The poller’s ID appears in the URL displayed at the bottom of the page.

- On both sides (central and poller), check that the gorgoned daemon is up and running:

      [root@centreon-central ~]# systemctl status gorgoned
      gorgoned.service - Centreon Gorgone
         Loaded: loaded (/etc/systemd/system/gorgoned.service; enabled; vendor preset: disabled)
         Active: active (running) since mer. 2021-02-10 09:56:03 CET; 2h 10min ago
       Main PID: 3413 (perl)
         CGroup: /system.slice/gorgoned.service
                 ├─3413 /usr/bin/perl /usr/bin/gorgoned --config=/etc/centreon-gorgone/config.yaml --logfile=/var/log/centreon-gorgone/gorgoned.log --severity=info
                 ├─3421 gorgone-nodes
                 ├─3428 gorgone-dbcleaner
                 ├─3435 gorgone-autodiscovery
                 ├─3442 gorgone-cron
                 ├─3443 gorgone-engine
                 ├─3444 gorgone-statistics
                 ├─3445 gorgone-action
                 ├─3446 gorgone-httpserver
                 ├─3447 gorgone-legacycmd
                 ├─3478 gorgone-proxy
                 ├─3479 gorgone-proxy
                 ├─3486 gorgone-proxy
                 ├─3505 gorgone-proxy
                 └─3506 gorgone-proxy

      févr. 10 09:56:03 cent7-2010-ems systemd[1]: Started Centreon Gorgone.

      [root@centreon-poller centreon-engine]# systemctl status gorgoned
      gorgoned.service - Centreon Gorgone
         Loaded: loaded (/etc/systemd/system/gorgoned.service; disabled; vendor preset: disabled)
         Active: active (running) since Wed 2021-02-10 09:53:16 CET; 2h 13min ago
       Main PID: 4384 (perl)
         CGroup: /system.slice/gorgoned.service
                 ├─4384 /usr/bin/perl /usr/bin/gorgoned --config=/etc/centreon-gorgone/config.yaml --logfile=/var/log/centreon-gorgone/gorgoned.log --severity=info
                 ├─4398 gorgone-dbcleaner
                 ├─4399 gorgone-engine
                 └─4400 gorgone-action

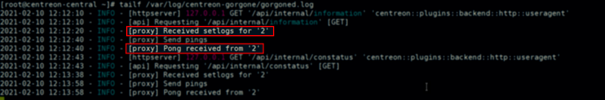

- On the central server, check that the gorgoned log (/var/log/centreon-gorgone/gorgoned.log) contains messages related to that poller ID and that none of them report errors:

- On the poller, check that the gorgoned configuration file /etc/centreon-gorgone/config.d/40-gorgoned.yaml exists and is similar to this one:

      [root@centreon-poller centreon-engine]# cat /etc/centreon-gorgone/config.d/40-gorgoned.yaml
      name: gorgoned-Poller
      description: Configuration for poller Poller
      gorgone:
        gorgonecore:
          id: 2
          external_com_type: tcp
          external_com_path: "*:5556"
          authorized_clients:
            - key: 3H2jXp7D7PC7OTM1ifosCO0l7iqJkf60lHWGWYnR5qY
          privkey: "/var/lib/centreon-gorgone/.keys/rsakey.priv.pem"
          pubkey: "/var/lib/centreon-gorgone/.keys/rsakey.pub.pem"
        modules:
          - name: action
            package: gorgone::modules::core::action::hooks
            enable: true
          - name: engine
            package: gorgone::modules::centreon::engine::hooks
            enable: true
            command_file: "/var/lib/centreon-engine/rw/centengine.cmd"

If not, check this link to create it again.
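When the file exists, one detail worth comparing is the id value under gorgonecore. We assume here that it is meant to match the poller ID noted in the web interface (2 in this example). The gorgone_id helper below is a hypothetical sketch that extracts that value with sed rather than a real YAML parser, so it only suits files laid out like the one above.

```shell
# gorgone_id
# Hypothetical helper: reads a gorgoned YAML configuration on stdin and
# prints the value of its first "id:" key (the gorgonecore id).
gorgone_id() {
    sed -n 's/^[[:space:]]*id:[[:space:]]*\([0-9][0-9]*\).*/\1/p' | head -n 1
}

# Example, run on the poller (2 being the ID read from the web interface):
#   [ "$(gorgone_id < /etc/centreon-gorgone/config.d/40-gorgoned.yaml)" = "2" ] \
#       || echo "gorgonecore id does not match the poller ID"
```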

Check 4: Managing the centreon-broker process

Centreon-broker flows between the central and the poller are essential for a fully operational and efficient Centreon platform.

Beyond network issues (a lost BBDO connection on TCP/5669), real-time data processing can also be interrupted, in which case the poller is displayed as “Not updated.” Here are the steps to check the Centreon broker.

- First, check the version consistency between the cbmod module (on the poller) and the centreon-broker module on the central. They should always be the same:

      [root@centreon-central ~]# rpm -qa | grep centreon-broker
      centreon-broker-cbd-21.04.3-5.el7.centos.x86_64
      centreon-broker-storage-21.04.3-5.el7.centos.x86_64
      centreon-broker-21.04.3-5.el7.centos.x86_64
      centreon-broker-cbmod-21.04.3-5.el7.centos.x86_64
      centreon-broker-core-21.04.3-5.el7.centos.x86_64

      [root@centreon-poller ~]# rpm -qa | grep cbmod
      centreon-broker-cbmod-21.04.3-5.el7.centos.x86_64
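Comparing the two listings by eye works, but the comparison is easy to script. The sketch below uses a hypothetical cbmod_version helper that pulls the version-release string out of rpm -qa output. The example in the comments assumes root SSH access from the central to the poller, which may not be set up on your platform.

```shell
# cbmod_version
# Hypothetical helper: reads `rpm -qa` output on stdin and prints the
# version-release part of the centreon-broker-cbmod package name.
cbmod_version() {
    sed -n 's/^centreon-broker-cbmod-\([0-9][0-9.]*-[0-9][0-9]*\)\..*/\1/p'
}

# Example, run on the central (assumes root SSH access to the poller):
#   central=$(rpm -qa | cbmod_version)
#   poller=$(ssh root@192.168.56.126 rpm -qa | cbmod_version)
#   [ "$central" = "$poller" ] || echo "version mismatch: $central vs $poller"
```

With the packages listed above, the helper prints 21.04.3-5 on both hosts.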

- On the central server, check that the cbd daemon is up and running:

      [root@centreon-central ~]# systemctl status cbd
      cbd.service - Centreon Broker watchdog
         Loaded: loaded (/usr/lib/systemd/system/cbd.service; enabled; vendor preset: disabled)
         Active: active (running) since mer. 2021-02-10 09:41:01 CET; 5h 13min ago
       Main PID: 1529 (cbwd)
         CGroup: /system.slice/cbd.service
                 ├─1529 /usr/sbin/cbwd /etc/centreon-broker/watchdog.json
                 ├─1537 /usr/sbin/cbd /etc/centreon-broker/central-broker.json
                 └─1538 /usr/sbin/cbd /etc/centreon-broker/central-rrd.json

Like the other vital daemons, it should appear as “active (running)” and list its three processes: the cbwd watchdog, the cbd broker instance, and the cbd RRD instance.
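The process count can also be verified without reading the systemctl tree. The sketch below relies on a hypothetical broker_processes_ok helper that counts command names. The expectation of one cbwd and two cbd instances reflects the configuration shown in this tutorial (central-broker and central-rrd) and is an assumption for other setups.

```shell
# broker_processes_ok
# Hypothetical helper: reads one command name per line on stdin (for
# example from `ps -e -o comm=`) and returns 0 if it sees exactly one cbwd
# watchdog and at least two cbd instances.
broker_processes_ok() {
    awk '
        $1 == "cbwd" { watchdog++ }
        $1 == "cbd"  { brokers++ }
        END { exit (watchdog == 1 && brokers >= 2 ? 0 : 1) }
    '
}

# Example, run on the central:
#   ps -e -o comm= | broker_processes_ok || echo "a Centreon Broker process is missing"
```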

- Check the logs: they’re located in the /var/log/centreon-broker directory. On the Central, look for the central-broker-master.log file; on the poller, look for the module-poller.log file:

      [root@centreon-central ~]# ls -ltr /var/log/centreon-broker/
      total 8
      -rw-rw-r-- 1 centreon-broker centreon-broker 1039 5 févr. 10:43 central-broker-master.log-20210205
      -rw-rw-r-- 1 centreon-broker centreon-broker 360 5 févr. 10:43 central-module-master.log-20210205
      -rw-rw-r-- 1 centreon-broker centreon-broker 240 5 févr. 10:43 central-rrd-master.log-20210205
      -rw-rw-r-- 1 centreon-broker centreon-broker 3610 5 févr. 10:43 watchdog.log-20210205
      -rw-rw-r-- 1 centreon-broker centreon-broker 1402 10 févr. 09:41 watchdog.log
      -rw-rw-r-- 1 centreon-broker centreon-broker 669 10 févr. 09:41 central-broker-master.log
      -rw-rw-r-- 1 centreon-broker centreon-broker 200 10 févr. 09:41 central-rrd-master.log
      -rw-rw-r-- 1 centreon-broker centreon-broker 400 10 févr. 10:00 central-module-master.log

      [root@centreon-poller ~]# ls -ltr /var/log/centreon-broker/
      total 4
      -rw-r--r--. 1 centreon-engine centreon-engine 1330 Feb 10 11:12 module-poller.log

These files should not have recent error messages.
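To avoid scrolling through a whole file, the tail of a log can be filtered for error lines. The recent_errors helper below is a hypothetical sketch. Searching for the word "error" is our assumption about what a problem looks like in these logs, so treat an empty result as a hint rather than proof that all is well.

```shell
# recent_errors [LINES]
# Hypothetical helper: reads a log on stdin and prints the lines that
# mention "error" (any case) among the last LINES lines (default 200).
recent_errors() {
    tail -n "${1:-200}" | grep -i 'error'
}

# Example, run on the central:
#   recent_errors 500 < /var/log/centreon-broker/central-broker-master.log
# Example, run on the poller:
#   recent_errors 500 < /var/log/centreon-broker/module-poller.log
```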

In older versions of Centreon, you might find entries of this kind:

Error : conflict_manager : error in the main loop

If so, simply restart the centreon-broker daemon on the Central server:

[root@centreon-central ~]# systemctl restart cbd

- Last but not least, on the poller, check that the cbmod module is successfully loaded when centreon-engine starts. This information should appear in the centengine.log file:

      [root@centreon-poller ~]# grep -i cbmod.so /var/log/centreon-engine/centengine.log
      [1612947395] [4463] Event broker module '/usr/lib64/nagios/cbmod.so' initialized successfully
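The first bracketed number on each centengine.log line is a Unix timestamp: 1612947395 is 2021-02-10 08:56:35 UTC, that is 09:56:35 CET, the start time reported by systemctl in Check 2. To confirm that the "initialized successfully" line belongs to the latest start, the timestamps can be made readable. The humanize_engine_log helper below is a hypothetical sketch and assumes a date command that accepts -d "@seconds", as GNU date does.

```shell
# humanize_engine_log
# Hypothetical helper: reads centengine.log lines on stdin and replaces the
# leading "[epoch]" with a UTC date. Lines without a leading timestamp are
# printed unchanged. Assumes a `date` that accepts -d "@SECONDS" (GNU date).
humanize_engine_log() {
    while IFS= read -r line; do
        ts=$(printf '%s\n' "$line" | sed -n 's/^\[\([0-9][0-9]*\)].*/\1/p')
        if [ -n "$ts" ]; then
            rest=${line#*] }    # drop the "[epoch] " prefix
            printf '%s %s\n' "$(date -u -d "@$ts" '+%Y-%m-%d %H:%M:%S')" "$rest"
        else
            printf '%s\n' "$line"
        fi
    done
}

# Example, run on the poller:
#   grep -i cbmod.so /var/log/centreon-engine/centengine.log | humanize_engine_log
```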

What’s Next?

In this tutorial, we toured the primary checks that should be done whenever a poller seems to be in a funny state. Of course, other causes exist, and sometimes the solutions described here won’t do the trick. Hopefully, they will at least give you clues on where to start investigating.

Still blocked and running out of coffee? Keep calm and visit us on our Community Slack! There will always be someone keen to give you a hand (unless they are out of coffee too). Suggestions are always welcome!

In episode 2, we will focus on operational issues: being unable to set a downtime, acknowledge an alarm, and so on. Stay tuned!